Difference between revisions of "QoS"

The Wiki of Unify contains information on clients and devices, communications systems and unified communications. - Unify GmbH & Co. KG is a Trademark Licensee of Siemens AG.

Latest revision as of 07:19, 29 April 2015

QoS = Quality of Service

Quality of Service refers to control mechanisms in data networks that try to ensure a certain level of performance for a data flow, in accordance with requests from the application program. Network equipment tries to ensure the requested level of performance by performing, among other things:

- Call Admission Control

- Classification of Packets

- Class based Packet Processing

  - Queuing and Scheduling

  - Traffic Shaping

  - Congestion Avoidance (random early detect, RED)

The goal of Quality of Service mechanisms is to minimize

- packet drops

- delay

- jitter (variable delay)

especially for high priority traffic flows.


Call Admission Control

Mechanisms like Queuing and Scheduling help to privilege high-priority traffic (e.g. real-time traffic) over other data traffic. However, if the sum of all high-priority flows exceeds the capabilities of network links or elements, those techniques will fail. An additional high-priority flow might degrade the quality of all existing flows.

Call Admission Control makes sure that existing flows keep their quality by rejecting any additional high-priority flow. In terms of telephony, the call is rejected: the caller either receives a busy signal or the call is rerouted.

Centralized Static Call Admission Control

Unify OpenScape Voice implements a centralized static Call Admission Control that works in hub-and-spoke or star topologies. Topologies are abstracted to zones with unlimited bandwidth resources, which are interconnected by bottleneck links. A call across a bottleneck is admitted only if the bandwidth it needs does not exceed the bandwidth still available on that link. For example, if phone A_1 resides in zone_A, phone B_1 resides in zone_B, and the two zones are interconnected by a bottleneck link of 150 kbps, a call of 100 kbps is admitted if no other call is using the bottleneck link. Now a second phone A_2 in zone_A wants to start another 100 kbps call to phone B_2 in zone_B. Since the needed bandwidth would exceed the remaining bandwidth of 50 kbps, the call is rejected, preserving the quality of the first call.

In the OpenScape Voice solution, telephones are assigned to zones based on IP addresses or telephone numbers.

Cisco CallManager uses the same kind of centralized static Call Admission Control and is likewise limited to hub-and-spoke or star topologies.
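The zone example above can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not the OpenScape Voice or CallManager implementation; the class names `BottleneckLink` and `StaticCac` and the phone-to-zone table are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass
class BottleneckLink:
    """Bandwidth budget of the link between two zones (values in kbps)."""
    capacity_kbps: int
    reserved_kbps: int = 0

    def remaining_kbps(self) -> int:
        return self.capacity_kbps - self.reserved_kbps


@dataclass
class StaticCac:
    """Hypothetical central admission controller (illustration only)."""
    zone_of_phone: Dict[str, str]
    links: Dict[FrozenSet[str], BottleneckLink]

    def admit(self, caller: str, callee: str, needed_kbps: int) -> bool:
        zone_a = self.zone_of_phone[caller]
        zone_b = self.zone_of_phone[callee]
        if zone_a == zone_b:
            return True  # inside a zone, bandwidth is modelled as unlimited
        link = self.links[frozenset((zone_a, zone_b))]
        if needed_kbps > link.remaining_kbps():
            return False  # reject: admitting would degrade existing calls
        link.reserved_kbps += needed_kbps
        return True

    def release(self, caller: str, callee: str, kbps: int) -> None:
        zone_a = self.zone_of_phone[caller]
        zone_b = self.zone_of_phone[callee]
        if zone_a != zone_b:
            link = self.links[frozenset((zone_a, zone_b))]
            link.reserved_kbps = max(0, link.reserved_kbps - kbps)


cac = StaticCac(
    zone_of_phone={"A_1": "zone_A", "A_2": "zone_A",
                   "B_1": "zone_B", "B_2": "zone_B"},
    links={frozenset(("zone_A", "zone_B")): BottleneckLink(capacity_kbps=150)},
)
first_call = cac.admit("A_1", "B_1", 100)   # admitted, 50 kbps remain
second_call = cac.admit("A_2", "B_2", 100)  # rejected, only 50 kbps remain
```

Because the controller only counts bandwidth per zone pair, it needs no knowledge of the actual network path, which is also why the approach is restricted to topologies where each zone pair has exactly one bottleneck.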

Network based dynamic Call Admission Control

A standard way of performing Call Admission Control is the Resource reSerVation Protocol (RSVP), the signaling protocol of the IntServ Model. With RSVP, each end system that seeks to send a data flow sends an RSVP PATH message to its communication partner. Network elements along the path that are RSVP aware add route records to the RSVP PATH message; others route the packets like normal IP packets. The communication partner responds to the PATH message with an RSVP RESV packet and sends it back along the recorded path. RSVP-aware network elements then perform the reservation if enough bandwidth is available, or indicate the failure in the RSVP RESV packet.

RSVP has gained a reputation for not being scalable. However, some router vendors argue that this reputation is caused by coupling the RSVP Call Admission Control features with Queuing and Scheduling. When RSVP is used for Call Admission Control only and the DiffServ Model is used for Queuing and Scheduling, scalability is restored.
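The PATH/RESV exchange can be modelled roughly as follows. This is a toy simulation under simplifying assumptions, not an RSVP implementation: the `Hop` class and the functions `send_path` and `send_resv` are hypothetical names, and the immediate rollback of partial reservations on failure is a simplification of how real RSVP reports errors and times out state.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hop:
    """A network element on the path (hypothetical model)."""
    name: str
    rsvp_aware: bool
    free_kbps: int = 0


def send_path(hops: List[Hop]) -> List[Hop]:
    """PATH travels downstream; only RSVP-aware hops record themselves."""
    return [hop for hop in hops if hop.rsvp_aware]


def send_resv(recorded: List[Hop], needed_kbps: int) -> bool:
    """RESV travels back along the recorded hops, reserving at each one."""
    reserved: List[Hop] = []
    for hop in reversed(recorded):
        if hop.free_kbps < needed_kbps:
            # Simplification: undo partial reservations and report failure.
            for done in reserved:
                done.free_kbps += needed_kbps
            return False
        hop.free_kbps -= needed_kbps
        reserved.append(hop)
    return True


path = [Hop("router_1", True, 200), Hop("switch", False), Hop("router_2", True, 150)]
recorded = send_path(path)            # the non-RSVP switch is skipped
first = send_resv(recorded, 100)      # succeeds on both routers
second = send_resv(recorded, 100)     # fails: router_2 has only 50 kbps left
```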

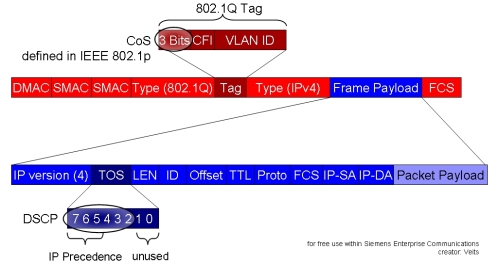

Classification of Packets

Network elements like routers or switches classify the ingress traffic based on

- ingress interface

- information found in the frames (Layer 2), like

  - 802.1p/802.1Q priority (often called CoS, class of service; mostly used)

  - L2 MAC addresses

  - VLAN ID

- information found in the packets (Layer 3), like

  - IP Precedence/DiffServ Codepoints (DSCP) (mostly used)

  - IP addresses

  - TCP/UDP port numbers

- or higher-layer information (e.g. Cisco NBAR; usually performed at network edges only, and mapped to DiffServ Codepoints there)

The most common classification scheme is to use the IEEE 802.1p/Q priority bits for layer 2 switching and the Type of Service/DiffServ Codepoint field in the IP header for layer 3 queuing.

CoS and DSCP of Voice Streams

For voice streams carried over the Real-time Transport Protocol (RTP), Unify phones use the DiffServ Codepoint EF, "Expedited Forwarding" (decimal value 46, binary 101110), on layer 3. Since most network vendors map the first 3 bits of the DSCP to the IEEE 802.1p/Q priority (CoS) bits, it is recommended to use CoS 5 (binary 101) on layer 2.

CoS and DSCP of Signaling Packets

For signaling, the DiffServ Codepoint AF31 (decimal value 26, binary 011010) is used. Since most network vendors map the first 3 bits of the DSCP to the IEEE 802.1p/Q priority (CoS) bits, it is recommended to use CoS 3 (binary 011) on layer 2.
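The relationship between the 6-bit DSCP and the 3-bit CoS value is a simple bit shift, as the following sketch shows. The function names are illustrative; the mapping itself is the "first 3 bits" convention described above, which individual devices may override by configuration.

```python
DSCP_EF = 46    # Expedited Forwarding, binary 101110 (voice)
DSCP_AF31 = 26  # Assured Forwarding 31, binary 011010 (signaling)


def dscp_to_cos(dscp: int) -> int:
    """Return the top 3 bits of a 6-bit DSCP as the 802.1p priority."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be a 6-bit value (0..63)")
    return dscp >> 3


def dscp_to_tos_byte(dscp: int) -> int:
    """Return the IP header ToS byte: DSCP in the upper 6 bits, lower 2 bits zero."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be a 6-bit value (0..63)")
    return dscp << 2


for name, dscp in (("EF", DSCP_EF), ("AF31", DSCP_AF31)):
    print(f"{name}: DSCP {dscp} ({dscp:06b}) -> CoS {dscp_to_cos(dscp)} "
          f"({dscp_to_cos(dscp):03b}), ToS byte 0x{dscp_to_tos_byte(dscp):02X}")
```

The ToS byte values (0xB8 for EF, 0x68 for AF31) are what typically appears in packet captures and in tools that expect the full 8-bit field rather than the DSCP.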

Class based Packet Processing

If an egress interface is congested, the network element needs to perform an action on packets. It needs to decide which packets are sent with a higher priority than others, which packets are stored and queued for later transmission, and which packets are dropped.

Queuing and Scheduling

In most of today's network elements, two or more packet queues exist per egress interface. If the egress interface is congested, the network element places each packet in a queue based on the packet's traffic class. The interface's scheduler chooses a packet from a queue and sends it to the egress interface. Several scheduling mechanisms exist:

- priority queuing: as long as the highest-priority queue is not empty, only packets from this queue are sent and all other queues need to wait. This can lead to starvation of lower-priority packet flows.

- (weighted) round-robin: all queues are served one after the other, e.g. queues 1 to 4 are served in the order 1-2-3-4-1-2-3-4 etc. If queue 1 has twice the weight of the others, the order becomes 1-1-2-3-4-1-1-2-3-4 etc. In more complex scheduling mechanisms, the packet length is also taken into account.

- (class-based) fair queuing: packets are classified based on flow parameters, e.g. source and destination IP addresses and TCP/UDP ports. The idea is to give each flow fair access to the egress interface. In class-based fair queuing, packets are mapped to traffic classes. Between classes, weighted round-robin (for example) is performed. Within a traffic class, fair queuing takes place.

- combinations of the above: e.g. Cisco Low Latency Queuing (LLQ) combines priority queuing for real-time applications with class-based weighted fair queuing.
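The first two schedulers in the list can be sketched as follows. This is a simplified illustration, assuming that the queues are already filled, that no new packets arrive while they are drained, and that weights count packets rather than bytes; the function names are hypothetical.

```python
from collections import deque
from typing import Deque, List, Sequence


def priority_schedule(queues: Sequence[Deque[str]]) -> List[str]:
    """Strict priority: queues[0] is highest; lower queues wait until it is empty."""
    sent: List[str] = []
    while any(queues):
        for queue in queues:
            if queue:
                sent.append(queue.popleft())
                break  # always restart from the highest-priority queue
    return sent


def weighted_round_robin(queues: Sequence[Deque[str]],
                         weights: Sequence[int]) -> List[str]:
    """Serve each queue in turn, up to `weight` packets per visit."""
    sent: List[str] = []
    while any(queues):
        for queue, weight in zip(queues, weights):
            for _ in range(weight):
                if not queue:
                    break
                sent.append(queue.popleft())
    return sent


queues = [deque(["1a", "1b", "1c", "1d"]), deque(["2a", "2b"]),
          deque(["3a", "3b"]), deque(["4a", "4b"])]
order = weighted_round_robin(queues, weights=[2, 1, 1, 1])
# order follows the 1-1-2-3-4-1-1-2-3-4 pattern described above
```

With `priority_schedule`, a continuously refilled first queue would never let the loop reach the others, which is the starvation effect mentioned in the list.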

Traffic Shaping

Traffic shaping is used if transmitting packets at wire speed on one network link would lead to congestion on another network link. Consider a QoS-capable router that is connected via 100 Mbps Fast Ethernet to a non-QoS-aware DSL modem, which in turn can send at a maximum transmission rate of 1 Mbps. In this case, the router should slow down its transmission rate to 1 Mbps.

The advantage is that the queue then builds up in the router, which can make sure that high-priority traffic is preferred over other traffic. If the traffic is not shaped within the router, the flow hits the DSL modem, which drops packets without regard to their priority.

For traffic shaping, the leaky bucket algorithm is commonly used.
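A leaky bucket used as a shaping queue can be sketched as follows: packets are buffered in a bucket of limited size and leak out at a constant rate. The sketch uses discrete time ticks and byte counts instead of real timers; the class name `LeakyBucketShaper` and its parameters are hypothetical, and priority handling inside the bucket is left out.

```python
from collections import deque
from typing import Deque, List


class LeakyBucketShaper:
    """Buffers packets and releases them at a constant rate (illustration only)."""

    def __init__(self, rate_bytes_per_tick: int, capacity_bytes: int) -> None:
        self.rate = rate_bytes_per_tick
        self.capacity = capacity_bytes
        self.queue: Deque[int] = deque()  # packet sizes in bytes
        self.queued_bytes = 0
        self.credit = 0                   # bytes that may be sent right now

    def enqueue(self, size: int) -> bool:
        """Add a packet; return False if the bucket would overflow (packet dropped)."""
        if self.queued_bytes + size > self.capacity:
            return False
        self.queue.append(size)
        self.queued_bytes += size
        return True

    def tick(self) -> List[int]:
        """Advance one time unit and return the sizes of the packets released."""
        self.credit += self.rate
        released: List[int] = []
        while self.queue and self.queue[0] <= self.credit:
            size = self.queue.popleft()
            self.credit -= size
            self.queued_bytes -= size
            released.append(size)
        if not self.queue:
            self.credit = 0  # an idle shaper must not save up credit for a burst
        return released


shaper = LeakyBucketShaper(rate_bytes_per_tick=1000, capacity_bytes=3000)
accepted = [shaper.enqueue(1000) for _ in range(4)]  # the fourth packet overflows
per_tick = [shaper.tick() for _ in range(4)]         # one packet leaves per tick
```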

Congestion Avoidance

Random Early Detect (RED), also known as Random Early Detection, is an intelligent packet-dropping mechanism used to control TCP flows in order to avoid TCP global synchronization.

As long as there are no packet drops, TCP tends to increase the flow bandwidth. Without RED, once the output buffer of a bottleneck link fills up, packets of all TCP flows are dropped at the same time (tail drops). All TCP flows then slow down quickly, leading to poor link utilization shortly thereafter. TCP then increases the flow bandwidth again until the buffer is full once more. This cyclic behavior is called TCP global synchronization.

RED randomly drops packets before the buffer is full, so only a few of the TCP flows slow down at any one time. The bandwidth usage of the different TCP flows is thereby decoupled, and a higher aggregate throughput with fewer packet drops can be reached.

RED is not effective for UDP flows, since UDP does not reduce its transmission rate when packets are dropped.
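The drop decision of RED can be sketched as a function of the average queue length. This is a simplified version of the classic scheme: the probability rises linearly between a minimum and a maximum threshold, and the refinement that spaces drops evenly is omitted. The threshold and weight values used below are arbitrary assumptions, not recommendations.

```python
import random


def update_average(avg_queue: float, current_queue: int, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the queue length."""
    return (1.0 - weight) * avg_queue + weight * current_queue


def red_drop_probability(avg_queue: float, min_th: float,
                         max_th: float, max_p: float) -> float:
    """Return the probability of dropping an arriving packet."""
    if avg_queue < min_th:
        return 0.0  # queue is short: never drop
    if avg_queue >= max_th:
        return 1.0  # queue is too long: drop every arriving packet
    return max_p * (avg_queue - min_th) / (max_th - min_th)


def should_drop(avg_queue: float, min_th: float, max_th: float,
                max_p: float, rng: random.Random) -> bool:
    """Randomly decide whether to drop, using the probability above."""
    return rng.random() < red_drop_probability(avg_queue, min_th, max_th, max_p)


rng = random.Random(1)
drops = sum(should_drop(30, min_th=20, max_th=40, max_p=0.1, rng=rng)
            for _ in range(10_000))
# at an average queue of 30 packets, about 5 % of arriving packets are dropped
```

Because each arriving packet is dropped with the same probability, flows that send more packets are hit more often, and different TCP flows back off at different times.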